8 Classical Sorting Algorithms

Contents

In this article, I’ll review the following 8 classical sorting algorithms, and implement them in Go:

- bubble sort

- selection sort

- insertion sort

- shell sort

- merge sort

- quick sort

- heap sort

- radix sort

These basic sorting algorithms are sometimes favored by interviewers, and the ideas of these classical sorting algorithms(like merge sort, quick sort, heap sort, etc.) are sometimes the key points of algorithm problems, so it’s worth taking time on it.

Highly inefficient sorts

Bubble sort

Bubble sort is simple but highly inefficient, so it’s rarely used in practice, unless in situation where inputs data is very small or nearly sorted.

The algorithms is to compare adjsent two numbers, swap them if first is larger than second, from beginning to end. Every time the biggest so far will pop to the right, and every time it takes O(n) time complexity and it needs n times, so the time complexity is O(n2).

|

|

Simple sorts

Two of the simplest sorts are insertion sort and selection sort, both of which are efficient on small data.

Selection sort

Selection sort is very simple:

- find the minimum in the list

- swap with the i index

Its time complexity is O(n2).

|

|

Insertion sort

Insertion sort is used in cases where the input data is small or partly sorted. The idea is to pick one from unsorted list and insert into sorted part.

Its time complexity is O(n2).

|

|

Shell sort

Shell sort improves upon insertion sort by moving out of order elements more than one position at a time. It will separate data by gap and sort each group by insertion sort, and continously decrease gap until it reaches 1, in that case it becomes insertion sort completely.

|

|

Efficient sorts

Heap sort

Heapsort is a much more efficient version of selection sort. It also works by determining the largest (or smallest) element of the list, placing that at the end (or beginning) of the list, then continuing with the rest of the list, but accomplishes this task efficiently by using a data structure called a heap, a special type of binary tree.

Using the heap, finding the next largest element takes O(log n) time, instead of O(n) for a linear scan as in simple selection sort. This allows Heapsort to run in O(n log n) time, and this is also the worst case complexity.

|

|

Merge sort

The concept of merge sort: Divide and Conquer is widely used for solving other questions. Basically it works as follows:

- If the length of list is smaller or equal than 1, it’s sorted

- Divide the list to two sublist and sort them recursively

- Merge two sorted list into one

Its time complexity is O(n2), and it needs O(n) space.

|

|

Quick sort

Quicksort is a divide and conquer algorithm which relies on a partition operation: to partition an array, an element called a pivot is selected. All elements smaller than the pivot are moved before it and all greater elements are moved after it. This can be done efficiently in linear time and in-place. The lesser and greater sublists are then recursively sorted. This yields average time complexity of O(n log n), with low overhead, and thus this is a popular algorithm.

|

|

Non-comparison sorts

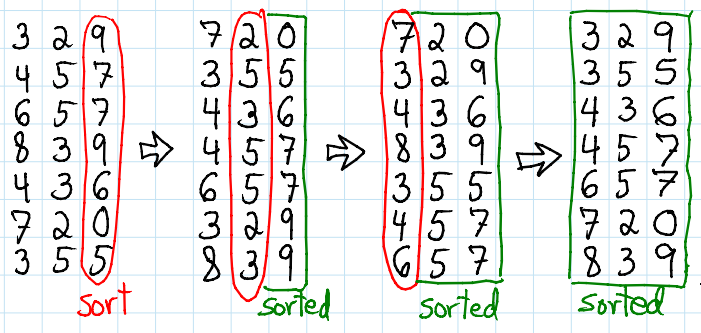

LSD Radix sort

Radix sort is an integer sorting algorithm that sorts data with integer keys by grouping the keys by individual digits that share the same significant position and value (place value).

Radix sort uses counting sort as a subroutine to sort an array of numbers.

|

|

References

Author Wenfeng

LastMod 2019-09-29